Imagine machines that can see, understand, and make decisions in real time. TTControl’s Emerging Technologies team makes that vision a reality.

We help you integrate AI-powered perception, sensor fusion, and autonomous assistance into your mobile machinery—so you can deliver smarter, safer, and more efficient machines to your customers.

What You Gain

Smarter Machines, Ready for the Field

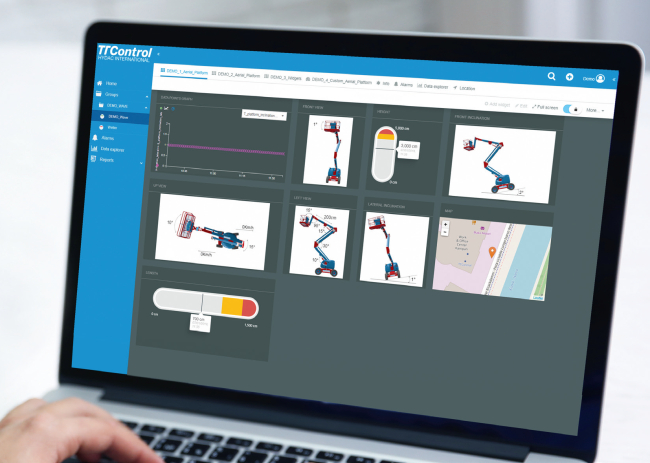

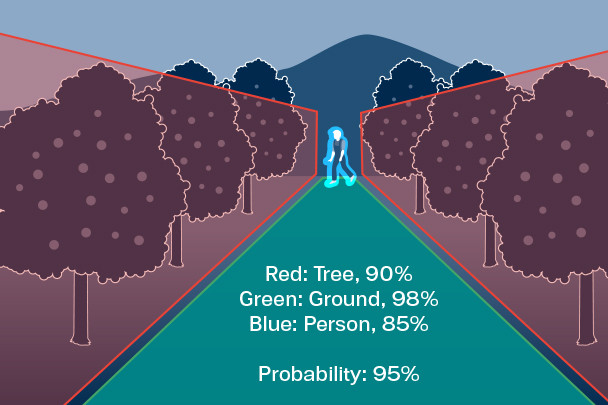

Equip your machines with real-time object detection, depth sensing, and drivable area recognition, powered by AI and tailored to your environment. Our framework supports multi-modal sensors (Radar, Lidar, Camera) and is highly portable between TTControl hardware platforms. It is based on ROS 2 and is optimized for the Hailo AI accelerator in our Fusion platform. The workflow for creating new applications and adapting the models to customer needs is based on Azure MLOps.

A Flexible, Customizable Framework

You get a fully open and adaptable perception framework that works with your choice of sensors (Radar, Lidar, Camera) and compute platforms (Hailo, NVIDIA, x86). Our architecture supports several sensor combinations and is easily extendable to semantic segmentation and drivable area recognition use cases.

Continuous Improvement, Built In

With our Azure-based MLOps pipeline, you can continuously improve your AI models: collect data, retrain, and deploy updates with ease. Local development environments are shipped as Docker containers that support configuration, customization, and diagnostics.

Seamless Integration

Our modular architecture ensures smooth integration with your existing ECUs, HMIs, and connectivity systems—including third-party products.

Built for OEMs Who Want to Lead

Whether you're exploring autonomous features or adding operator assistance functions, Emerging Technologies gives you:

- A head start on autonomy

- Reduced development time and cost

- A competitive edge in your market

Join the Innovation Network

In the Autonomous Operations Cluster, we collaborate with leading OEMs such as Ammann, Palfinger, Prinoth, and Rosenbauer to co-develop reusable technologies that drive the future of autonomy. Together we pool resources and build a cross-industry network to share know-how and shorten development times, with a focus on robust hardware, modular software, and customized application functions.

Application examples

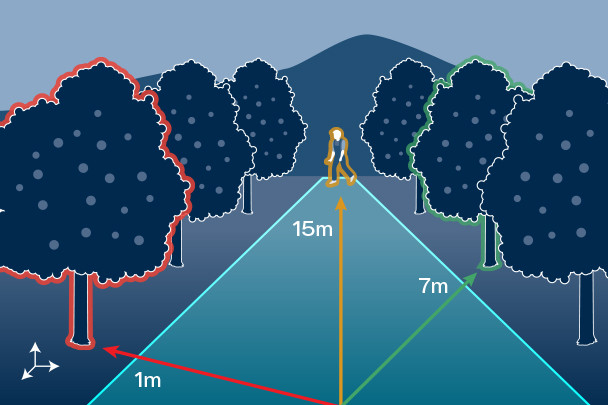

Obstacle Detection

The system detects an obstacle in the machine's path and warns the operator.

Collision Avoidance

The system identifies potential obstacles in the machine's path and calculates a safer route.

Row Guidance

The system autonomously steers the machine along rows in a defined off-road environment.

Key competences

Environment perception

Acquiring data from multiple sensors, synchronizing it in time and space, and fusing it increases the accuracy and reliability of your system.

Artificial Intelligence

Object detection and classification allow for target selection, identification of potential obstacles and optimization of work processes.

Comprehensive approach

Our system-level approach defines requirements for the entire application, including the hardware platform, sensors, and algorithms. It ensures a strong partnership throughout development, from prototyping to market introduction.

For further details, contact our sales representatives at products@ttcontrol.com.